Why building a cloud management AI starts with a diagram drawing app

I still remember the first web app I ever deployed. I did what most people were doing back then: I paid a hosting provider for a “v-host”, a tiny folder on a server I shared with hundreds of other users. It ran PHP scripts and allowed access to a small section of a shared MySQL database. It wasn’t fast, had no means of scaling, and security was... well... debatable. But it worked.

In fact, it did more than just work. One could reasonably argue that this was already “the cloud”: a virtual section of a physical server, fully managed by someone else. One could even see it as a predecessor of serverless infrastructure and database-as-a-service.

But that would be wrong.

The cloud has long since become more than just “someone else’s computer”. Way more. What AWS, Google Cloud, Azure and a host of other vendors are building is not just a more sophisticated and powerful version of my 2006 v-host; it is something qualitatively different.

And it’s only just beginning.

Every year, AWS and its competitors release an average of 23% more new services and features than the year before. Add the exploding ecosystem of open-source, decentralized and “cloud-native” technologies to the mix, and what emerges is a technology landscape bigger and more diverse than any before.

But size is not the whole story. The cloud is not just becoming wider, with more offerings competing for the same use cases. It is becoming more interconnected. Cloud services, by their very nature, don’t exist in isolation. They interact, integrate and form intricate, scale-free networks. In a nutshell, cloud services are evolving into a complex system.

Unfortunately, that’s bad news for us.

Throughout history, humans have benefited from exposure to powerful networks. But whether it's the global economy, the world's climate or natural ecosystems, we are mediocre at best at capturing their value.

Yet, more often than not, we’re not even satisfied with just capturing a network’s value. We try to manage it. And that's where things get really messy. As countless economic and ecological crises, centrally planned mass starvations and catastrophic “bring a cane toad to Australia to eat the bugs” experiments have made clear, we’re absolutely terrible at running complex systems.

And we will be absolutely terrible at running future versions of the cloud.

Fortunately for us, machines won't be. Practically unlimited parallelism, combined with self-reinforcing deep learning, enables us to create powerful agents: machines that are complex networks in their own right and are therefore ideally suited to govern the dynamic structures that are today's and tomorrow's clouds.

Arcentry is a first attempt at building such a machine.

But not right away. Like many AI companies we will follow two golden rules:

1. Don't start with the AI, start with the data.

Students need teachers and textbooks; researchers need samples and observations. Machines are no different. As Google and Facebook can testify, the precondition for any knowledgeable AI is a large, high-quality dataset, and the way to obtain one is to create an app or platform that generates it.

2. Don't wait until you can ship the AI.

The buzz around deep learning, neural nets, and virtual assistants implies that AI is a fully functioning and mature set of technologies. Nothing could be further from the truth. ML technologies are still very much in their infancy and so far we're only scratching the surface of their potential.

Consequently, it still takes a long time to train an AI to the point of consistently providing value. More time than most startups have.

Luckily, the context in which an AI operates can be just as valuable to a human user as it is to the AI. That context consists of its input (the stream of data and the platforms and applications that generate it) and its output capabilities (the information it can produce and the actions it can invoke). Arcentry will focus on iteratively providing this value to end users while simultaneously building up a dataset to train its AI. Here's how:

1. Arcentry today

Arcentry soft-launched on Tuesday, 17 July 2018. Its initial iteration focuses on helping users stay on top of the complexity of open-source and cloud architectures by creating isometric diagrams. It combines a feature-rich, fun-to-use interface with hundreds of open-source, AWS, Azure and Google Cloud components to create interactive, fully 3D diagrams that can be exported as high-res images for presentations, blog posts and developer platforms.

2. Collaboration & Workflow management

Arcentry's next major release will extend the diagramming features with real-time collaboration, social sharing and embeddable 3D diagrams for blogs and websites. It will also add sophisticated workflow management tools that enable enterprise customers to model multi-stage deployments, testing, approval and auditing directly from the app.

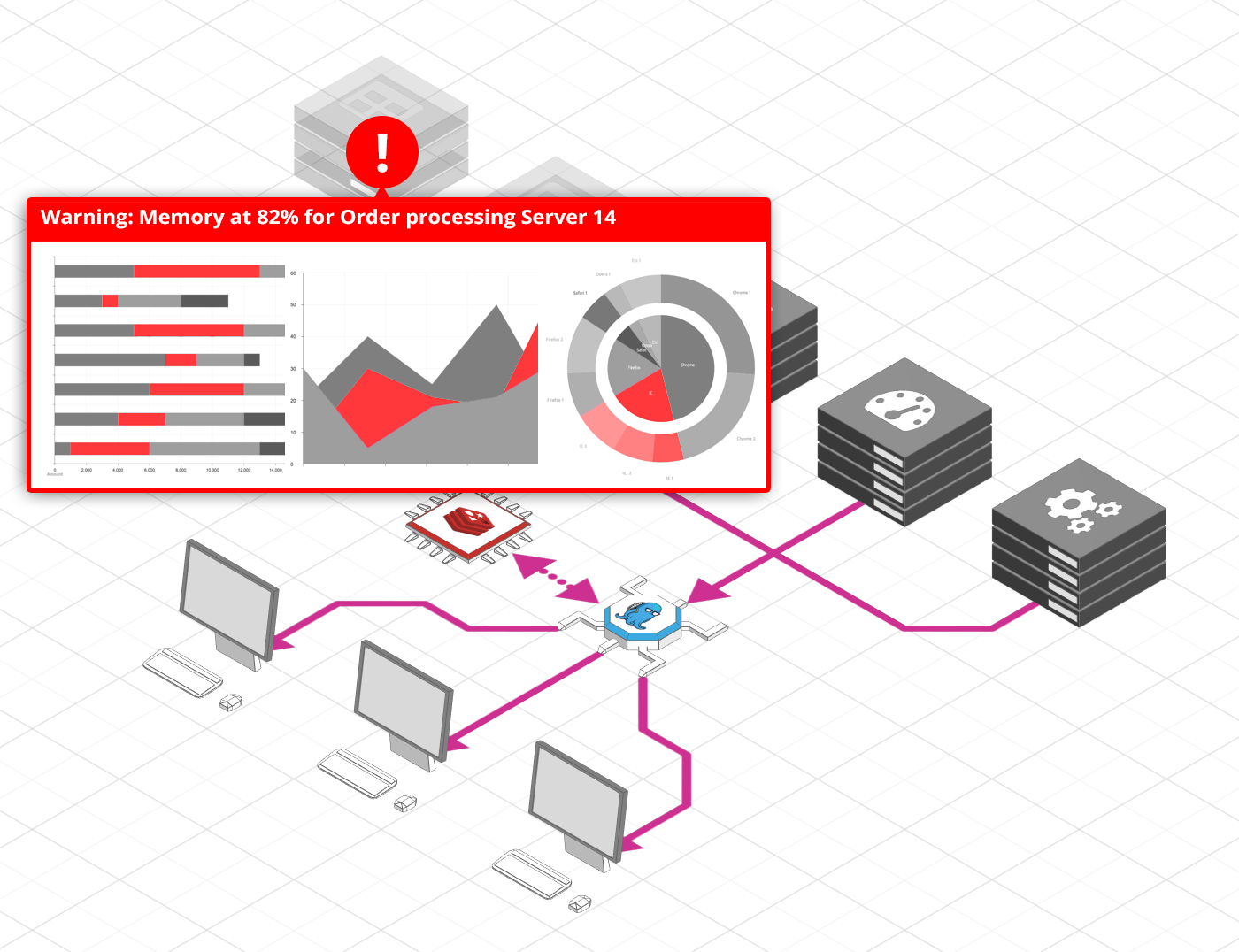

3. Monitoring

Seamless real-time monitoring reduces app downtime and increases reliability by helping DevOps teams diagnose problems faster and apply more targeted fixes. The current generation of monitoring tools, however, struggles to cope with the dynamic nature of autoscaling cloud deployments and, crucially, falls short when it comes to exposing problems in the context of the wider architecture. Both of these abilities come naturally to Arcentry, which makes it a good fit for integration with time-series databases, HTTP agents and monitoring services alike, providing a single, holistic picture of the current state of a cloud architecture.
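To make “exposing problems in the context of the wider architecture” more concrete, here is a minimal Python sketch; it is an illustration, not Arcentry's actual implementation. It assumes a hypothetical diagram model in which each component lists the components it depends on, hypothetical per-component error rates (as might be read from a time-series database) and an arbitrary alert threshold. When a component breaches the threshold, a breadth-first walk over the inverted dependency edges lists every component that directly or transitively depends on it.

```python
from collections import deque

# Hypothetical diagram model: each component lists the components it depends on.
DEPENDS_ON: dict[str, list[str]] = {
    "load-balancer": ["web-1", "web-2"],
    "web-1": ["cache", "database"],
    "web-2": ["cache", "database"],
    "cache": [],
    "database": [],
}


def impacted_by(failed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every component that directly or transitively depends on `failed`."""
    # Invert the edges so we can walk from a failing component to its dependents.
    dependents: dict[str, list[str]] = {name: [] for name in depends_on}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(component)

    # Breadth-first search over the inverted graph.
    impacted: set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in impacted:
                impacted.add(parent)
                queue.append(parent)
    return impacted


# Hypothetical error rates keyed by diagram component.
error_rates = {"database": 0.12, "cache": 0.0, "web-1": 0.01}
THRESHOLD = 0.05  # assumed alert threshold

for component, rate in error_rates.items():
    if rate > THRESHOLD:
        print(component, "->", sorted(impacted_by(component, DEPENDS_ON)))
```

Running it prints `database -> ['load-balancer', 'web-1', 'web-2']`: the database alert is reported together with the web servers and load balancer that rely on it, rather than as an isolated metric.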

4. Importing existing architectures / Deploying architectures from Arcentry

Crossing the chasm between the idea and the reality of a cloud deployment will enable users to gain a better overview of their infrastructure, avoid common security pitfalls and, most importantly, dramatically reduce the time and risk associated with deployment and change management. Arcentry will be able to read and visualize the structure of existing clouds, and it will gain the ability to deploy new architectures, in whole or in part, either by integrating directly with cloud-provider APIs or via an intermediate template such as Terraform or CloudFormation.
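As an illustration of the template route, the following Python sketch translates a diagram into a skeleton CloudFormation template. The diagram format, the logical IDs and the chosen resource types are assumptions made for this example. Diagram edges become `DependsOn` attributes so that CloudFormation creates resources in a valid order. The output is deliberately incomplete: resources such as `AWS::EC2::Instance` or `AWS::RDS::DBInstance` need `Properties` (an AMI ID, a database engine and so on) before the template could actually be deployed.

```python
import json

# Hypothetical diagram export: logical ID -> (CloudFormation resource type, IDs it depends on).
DIAGRAM: dict[str, tuple[str, list[str]]] = {
    "AppBucket": ("AWS::S3::Bucket", []),
    "AppDatabase": ("AWS::RDS::DBInstance", []),
    "AppServer": ("AWS::EC2::Instance", ["AppDatabase", "AppBucket"]),
}


def to_cloudformation(diagram: dict[str, tuple[str, list[str]]]) -> dict:
    """Translate diagram nodes and edges into a skeleton CloudFormation template."""
    resources: dict[str, dict] = {}
    for logical_id, (resource_type, depends_on) in diagram.items():
        # Properties are intentionally omitted in this sketch.
        resource: dict = {"Type": resource_type}
        if depends_on:
            # Diagram edges become explicit DependsOn attributes.
            resource["DependsOn"] = sorted(depends_on)
        resources[logical_id] = resource
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


print(json.dumps(to_cloudformation(DIAGRAM), indent=2))
```

Emitting Terraform instead would follow the same pattern, mapping each node to a resource block and each edge to a `depends_on` entry; what carries over in both cases is the dependency graph captured in the diagram.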

5. AI-assisted/driven design, deployment & monitoring

At this stage, we will have accumulated a rich dataset about the architectures users create, as well as a definition of their "success" (an architecture repeatedly deployed to production; see 4.) and "efficiency" (whether resources are under- or overutilized; see 3.). This will equip Arcentry with a robust machine learning dataset that includes both a wide array of inputs and their desirability and effectiveness. Based on this, we'll initially roll out an AI-driven virtual assistant capable of suggesting improvements to architectures during their design phase. As confidence in this assistant grows, we will gradually grant it more influence: from monitoring production architectures and pre-emptively scaling segments in anticipation of future demand, all the way to fully AI-designed clusters, AI-monitored self-healing deployments and continuous improvements to both efficiency and scalability without the need for human intervention.
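The "success" and "efficiency" definitions above could be turned into training labels roughly as follows. This is a minimal Python sketch under stated assumptions: the record format, the threshold of three production deployments and the 40 to 80% target utilization band are hypothetical values chosen for illustration, not figures from Arcentry.

```python
from dataclasses import dataclass

# Assumed thresholds, chosen for illustration only.
MIN_PRODUCTION_DEPLOYS = 3        # stands in for "repeatedly deployed to production"
TARGET_UTILIZATION = (0.4, 0.8)   # outside this band a resource counts as under-/overutilized


@dataclass
class ArchitectureRecord:
    """Hypothetical record describing one user-designed architecture."""
    name: str
    production_deploys: int           # number of deployments to production
    utilization_samples: list[float]  # average utilization per resource, 0.0 to 1.0


def efficiency(samples: list[float]) -> float:
    """Share of resources whose utilization lies inside the target band."""
    if not samples:
        return 0.0
    low, high = TARGET_UTILIZATION
    return sum(low <= s <= high for s in samples) / len(samples)


def label(record: ArchitectureRecord) -> dict:
    """Attach the 'success' and 'efficiency' labels used as training targets."""
    return {
        "name": record.name,
        "success": record.production_deploys >= MIN_PRODUCTION_DEPLOYS,
        "efficiency": efficiency(record.utilization_samples),
    }


print(label(ArchitectureRecord("checkout-service", 5, [0.55, 0.72, 0.15, 0.95])))
```

Here two of the four resources fall inside the band, so the example prints `{'name': 'checkout-service', 'success': True, 'efficiency': 0.5}`. Using a continuous efficiency score rather than a yes/no flag is one possible design choice; it lets a model distinguish slightly mis-sized architectures from badly mis-sized ones.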