What comes after serverless?

As humans, we have this weird tendency: We believe that however things are right now, that's the way they'll always be. It's not that we don't acknowledge change or past progress, but somehow we convince ourselves that the status quo constitutes a peak or final stage in its development, and we struggle to imagine how it could ever be superseded.

This seems particularly true of recent discussions about serverless: It's just an isolated function, right? How much less could you possibly run?

Well, it's not so much about the code itself as about its ecosystem and how it's run. Today, serverless means writing your code as functions, deploying them to a major cloud provider (AWS, Azure, GCP) and setting up a number of external services for state (databases, caches), routing (API gateways), validation, identity management and so on.

A lot has been written (e.g. by us) about the complexities of the current approach, and I strongly believe that the immediate future of serverless is simply easier serverless, as pioneered by companies such as zeit.co.

But what comes after that? If I were to hazard a guess, it would be networked computation:

Networked Computation?

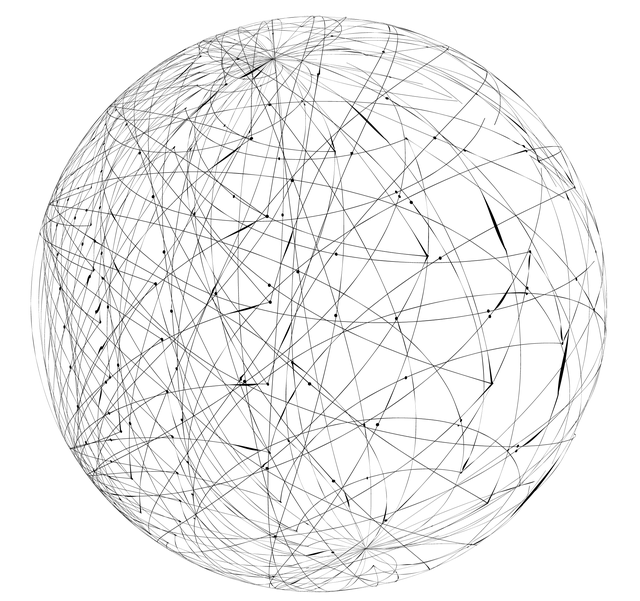

Computing power has become ubiquitous. When you send a request over the internet, pretty much every step along its way is a fully capable (and almost always underutilized) computer. From your browser and your smartphone to your network provider's gateway, the various hops along the way and the many machines that make up your cloud provider's infrastructure: all of them have the ability, and most likely the capacity, to execute a function.

We've only recently started making use of these machines, in an approach heralded as "Edge Computing", but really, it's just common sense.

Many of these computers are able to run our function sooner - and probably at lower costs - than our cloud provider's remote datacentre.

But of course, things aren't that easy. Our code doesn't live and run in isolation. In order to truly utilize the resources at our disposal, we need to find ways to load dependencies, access distributed state and data storage, ensure strong consistency, interact with a host of services and APIs and do all this in a secure and reliable manner.

This is hard - but not impossible. The very foundations of the internet are built to facilitate efficient communication between decentralized nodes. If we get this right we might be able to deploy and use services faster, cheaper and more efficiently than with the serverless offerings currently at our disposal. And we might do so with the same - or even less - hardware and infrastructure than we have today.

But there's a catch:

As information-age romantics, we like to believe in a truly free, egalitarian and hence decentralized internet. Even Tim Berners-Lee, widely credited with inventing the World Wide Web itself, has gone out of his way to reshape it according to its original, decentralized principles.

But this might just not happen.

Whilst decentralization-focused projects like Ethereum or IPFS undeniably enjoy some measure of success, they are still a mere speck of dust compared to incumbent rivals like Amazon, Google or Microsoft.

One reason is that central nodes within scale-free networks grow relative to their own size, which means exponentially in absolute terms. Startups know this only too well. If you have a hundred users, gaining another twenty is hard work. If you have a million users, the next twenty are just around the corner. True decentralization requires capping the size and interconnectedness of any given node, which makes growth a cumbersome process, highly vulnerable to disruption from centralized players.
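The "rich get richer" dynamic behind scale-free networks is commonly modelled as preferential attachment (the Barabási-Albert model). The Python sketch below illustrates that general mechanism only and makes no claim about any real cloud market. It assumes the simplest variant, in which each new node links to exactly one existing node, chosen with probability proportional to that node's current number of connections. The function name `preferential_attachment` is hypothetical.

```python
import random


def preferential_attachment(steps, seed=0):
    """Grow a network where each new node links to one existing node,
    chosen with probability proportional to that node's current degree.

    Returns a list with the final degree of every node."""
    rng = random.Random(seed)
    degree = [1, 1]  # start with two nodes joined by a single edge
    # 'endpoints' lists each node once per edge it touches, so a uniform
    # pick from it is a degree-proportional pick of a node
    endpoints = [0, 1]
    for _ in range(steps):
        target = rng.choice(endpoints)  # well-connected nodes are likelier
        new_node = len(degree)
        degree.append(1)                # the newcomer has one edge
        degree[target] += 1             # the chosen node gains one edge
        endpoints.extend([target, new_node])
    return degree


if __name__ == "__main__":
    degrees = preferential_attachment(10_000)
    print("largest hubs:", sorted(degrees, reverse=True)[:5])
    print("median degree:", sorted(degrees)[len(degrees) // 2])
```

Running it for a few thousand steps typically leaves most nodes with a single connection while a handful of early hubs collect far more. That skew is the dynamic the argument above relies on: whoever is already well connected attracts most of the new connections.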

So for the networked computation approach described above to work, we'd either have to overcome the market power of established cloud providers (unlikely) or help cloud providers find a way to weave it into their services (granular VPNs, widespread edge VMs etc.).

But however we do it, I feel there's a reasonable chance that at some point our next Twitter page load will be answered by the idle processor core in our neighbor's thermostat.